What’s New in iOS 13? A Quick Summary for App Developers

Author : John Prabhu 17th Sep 2019

Apple announced iOS 13 at WWDC and ran developer and public betas over the summer. After its iPhone event, Apple confirmed that iOS 13 and watchOS 6 will be officially released on September 19.

Currently, iOS 13.1 developer beta 3 is available to download on iPhone and iPad.

Let’s take a brief look at some of the features available to developers in iOS 13.

Dark Mode

iOS 13 integrates Dark Mode throughout the system, and Xcode 11 gives you tools to support Dark Mode in your apps.

Interface Builder now offers an easy way to switch your designs and previews between light and dark. SwiftUI also lets you preview both modes side by side. Assets can be labeled in asset catalogs for both light and dark mode, and you can switch in and out of Dark Mode while debugging.
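Besides asset catalogs, colors can also adapt to Dark Mode in code. The following is a minimal sketch, assuming a UIKit app targeting iOS 13; the helper name `adaptiveBackgroundColor` and the gray values are illustrative choices, not something prescribed by Apple or this article.

```swift
import UIKit

// Hypothetical helper (name and color values are assumptions for illustration):
// returns a color that resolves differently for light and dark appearances.
func adaptiveBackgroundColor() -> UIColor {
    return UIColor { traitCollection in
        // UIKit calls this closure again whenever the color is resolved
        // against a different trait collection.
        if traitCollection.userInterfaceStyle == .dark {
            return UIColor(white: 0.1, alpha: 1.0)   // near-black for Dark Mode
        } else {
            return UIColor(white: 1.0, alpha: 1.0)   // white for Light Mode
        }
    }
}
```

A view controller could assign the result to `view.backgroundColor`, and setting `overrideUserInterfaceStyle = .dark` on that controller is one way to force the dark appearance while testing.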

AR & Machine Learning (ML)

With the update, the Natural Language framework analyzes natural language text and deduces its language-specific metadata. It offers features such as language and script identification, tokenization, lemmatization, part-of-speech tagging, and named entity recognition.
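To make the features listed above more concrete, here is a small sketch of language identification, tokenization, and part-of-speech tagging with the Natural Language framework. The function names are hypothetical helpers introduced for illustration, and the tags returned depend on Apple's on-device models.

```swift
import NaturalLanguage

// Hypothetical helper: guesses the dominant language of a piece of text.
func dominantLanguage(of text: String) -> NLLanguage? {
    return NLLanguageRecognizer.dominantLanguage(for: text)
}

// Hypothetical helper: splits text into word tokens.
func wordTokens(in text: String) -> [String] {
    let tokenizer = NLTokenizer(unit: .word)
    tokenizer.string = text
    return tokenizer.tokens(for: text.startIndex..<text.endIndex).map { String(text[$0]) }
}

// Hypothetical helper: pairs each word with its lexical class (noun, verb, ...).
func lexicalClasses(in text: String) -> [(word: String, tag: String)] {
    let tagger = NLTagger(tagSchemes: [.lexicalClass])
    tagger.string = text
    var result: [(word: String, tag: String)] = []
    let options: NLTagger.Options = [.omitWhitespace, .omitPunctuation]
    tagger.enumerateTags(in: text.startIndex..<text.endIndex,
                         unit: .word,
                         scheme: .lexicalClass,
                         options: options) { tag, range in
        if let tag = tag {
            result.append((word: String(text[range]), tag: tag.rawValue))
        }
        return true   // keep enumerating
    }
    return result
}
```

Swapping `.lexicalClass` for `.lemma` or `.nameType` in the tagger gives lemmatization or named entity recognition in the same pattern.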

ARKit 3 offers People Occlusion, which lets AR objects appear in front of and behind people in the real world. ARKit Face Tracking can now track up to 3 faces at once, and ARKit 3 lets you use the front and rear cameras simultaneously. Additional improvements in iOS 13 include detecting up to 100 images at a time and automatically estimating the physical size of a detected image.
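The ARKit 3 capabilities above are opt-in and device-dependent, so a configuration typically checks for support first. The sketch below shows one way to do that; the two factory function names are assumptions made for this example.

```swift
import ARKit

// Hypothetical factory: world tracking with people occlusion and
// simultaneous front-camera face tracking, enabled only where supported.
func makeWorldTrackingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) {
        // Lets rendered content pass in front of and behind people.
        configuration.frameSemantics.insert(.personSegmentationWithDepth)
    }
    if ARWorldTrackingConfiguration.supportsUserFaceTracking {
        // Uses the front camera for face tracking while the rear camera tracks the world.
        configuration.userFaceTrackingEnabled = true
    }
    return configuration
}

// Hypothetical factory: face tracking for up to three faces,
// capped at whatever the current device reports as supported.
func makeFaceTrackingConfiguration() -> ARFaceTrackingConfiguration {
    let configuration = ARFaceTrackingConfiguration()
    let supported = ARFaceTrackingConfiguration.supportedNumberOfTrackedFaces
    if ARFaceTrackingConfiguration.isSupported && supported > 0 {
        configuration.maximumNumberOfTrackedFaces = min(3, supported)
    }
    return configuration
}
```

Either configuration would then be passed to an `ARSession` via `run(_:)`.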

Create ML, combined with the Natural Language framework, lets you train and deploy custom natural language models. The Natural Language framework also adds word embeddings, sentiment classification, and a text catalog.

Moreover, the Create ML app offers model templates for object detection, activity and sound classification, and recommendations. You can also train multiple models with different datasets in a single project.

The Create ML Swift API lets you create machine learning models optimized for tasks such as regression, image classification, word tagging, and sentence classification.

Apple Sign-In & Camera

iOS 13 introduces Sign in with Apple, which lets users sign in to an app with their Apple ID and authenticate using Touch ID or Face ID. Its new anti-fraud feature uses on-device machine learning to help determine whether a user is likely a real person.
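As a rough illustration of the sign-in flow, the sketch below builds an Apple ID request and reads the anti-fraud signal from the returned credential. The `AppleSignInCoordinator` class and `makeAppleIDRequest` function are hypothetical names, and setting a `presentationContextProvider` is omitted here for brevity.

```swift
import AuthenticationServices

// Hypothetical helper: builds a request asking for the user's name and email.
func makeAppleIDRequest() -> ASAuthorizationAppleIDRequest {
    let request = ASAuthorizationAppleIDProvider().createRequest()
    request.requestedScopes = [.fullName, .email]
    return request
}

// Hypothetical coordinator that starts the flow and handles the result.
final class AppleSignInCoordinator: NSObject, ASAuthorizationControllerDelegate {

    func start() {
        let controller = ASAuthorizationController(authorizationRequests: [makeAppleIDRequest()])
        controller.delegate = self
        controller.performRequests()
    }

    func authorizationController(controller: ASAuthorizationController,
                                 didCompleteWithAuthorization authorization: ASAuthorization) {
        guard let credential = authorization.credential as? ASAuthorizationAppleIDCredential else {
            return
        }
        // Stable, app-scoped identifier for the signed-in user.
        print("User identifier: \(credential.user)")
        // realUserStatus carries the on-device "is this a real person" estimate.
        if credential.realUserStatus == .likelyReal {
            print("The system considers this user likely to be a real person.")
        }
    }

    func authorizationController(controller: ASAuthorizationController,
                                 didCompleteWithError error: Error) {
        print("Sign in with Apple failed: \(error.localizedDescription)")
    }
}
```

The app target also needs the Sign in with Apple capability enabled for the request to succeed.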

The Portrait Segmentation API lets you apply new effects to portrait photos shot in your camera app. AVCaptureMultiCamSession lets you record video simultaneously from the front and rear cameras, and AVSemanticSegmentationMatte lets you capture hair, skin, and teeth segmentation mattes in a photo.
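A minimal sketch of setting up simultaneous front and rear capture might look like the following. The `makeDualCameraSession` function and `MultiCamSetupError` type are assumptions for this example, and outputs, connections, and camera permission handling are left out.

```swift
import AVFoundation

// Hypothetical error type for this sketch.
enum MultiCamSetupError: Error {
    case notSupported
    case cameraUnavailable
    case cannotAddInput
}

// Hypothetical helper: creates a multi-cam session with the rear and front
// wide-angle cameras attached as inputs.
func makeDualCameraSession() throws -> AVCaptureMultiCamSession {
    // Multi-cam capture is only available on some devices.
    guard AVCaptureMultiCamSession.isMultiCamSupported else {
        throw MultiCamSetupError.notSupported
    }

    let session = AVCaptureMultiCamSession()
    session.beginConfiguration()
    defer { session.commitConfiguration() }

    let positions: [AVCaptureDevice.Position] = [.back, .front]
    for position in positions {
        guard let camera = AVCaptureDevice.default(.builtInWideAngleCamera,
                                                   for: .video,
                                                   position: position) else {
            throw MultiCamSetupError.cameraUnavailable
        }
        let input = try AVCaptureDeviceInput(device: camera)
        guard session.canAddInput(input) else {
            throw MultiCamSetupError.cannotAddInput
        }
        // Connections to outputs are wired up manually in a multi-cam session.
        session.addInputWithNoConnections(input)
    }
    return session
}
```

On devices or simulators without multi-cam support the function throws `MultiCamSetupError.notSupported`, so callers can fall back to a single-camera `AVCaptureSession`.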

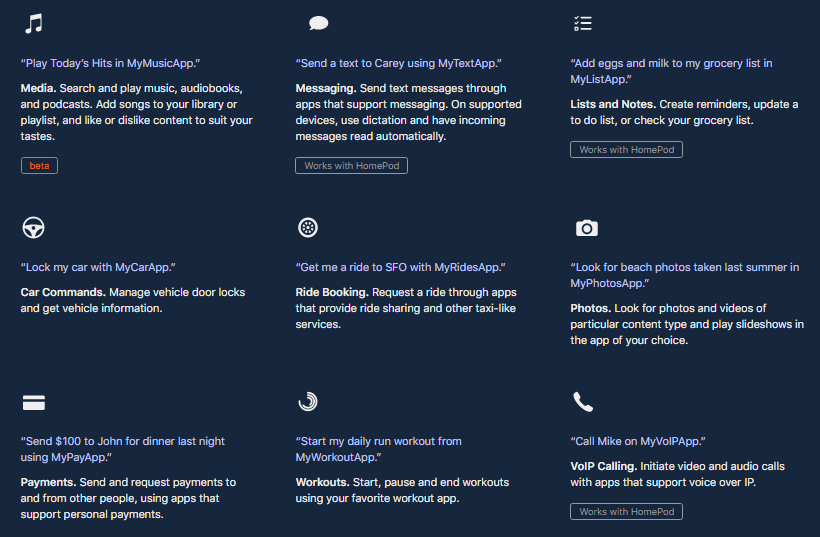

Siri

The Shortcuts API offers new conversational shortcuts, customization, and media playback experiences. SiriKit enables apps to communicate with Siri even when the app isn’t running. The new Shortcuts app suggests relevant shortcuts based on the user’s routines and lets users pair their shortcuts with actions from other apps. In addition, the new ‘Automations’ tab allows users to set up shortcuts to run automatically.
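For Siri to suggest an app's actions as shortcuts, the app first has to tell the system about them. One common way is to donate an `NSUserActivity`, sketched below; the activity type string, title, and invocation phrase are made-up values for illustration, and the activity type would also need to be declared in the app's Info.plist.

```swift
import Foundation
import Intents

// Hypothetical helper: describes an in-app action that Siri may suggest.
// The identifier, title, and phrase are assumptions for this example.
func makeOrderCoffeeActivity() -> NSUserActivity {
    let activity = NSUserActivity(activityType: "com.example.app.order-coffee")
    activity.title = "Order coffee"
    // Allows the system to suggest this activity as a shortcut.
    activity.isEligibleForPrediction = true
    // Phrase proposed to the user when they add the shortcut to Siri.
    activity.suggestedInvocationPhrase = "Coffee time"
    return activity
}
```

Assigning the returned activity to a view controller's `userActivity` property, or calling `becomeCurrent()` on it, donates it when the user performs the action.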

We, at TechAffinity, are a mobile application development company and have been diligently developing iOS applications complying with all the guidelines specified by Apple. All our iOS apps make the best use of the updated iOS features to provide the best-in-class user experience.

Upgrade your app to the new iOS 13 standards and incorporate the updated features available with iOS 13. Get in touch with us to make significant improvements to your iOS apps. You can also email your queries about possible upgrades to your app to media@techaffinity.com.